Driverless technology is usually divided into three parts: environment perception and positioning, decision planning, and motion control. Environment perception and positioning determines the state of the environment and of the car itself: where the surrounding cars and pedestrians are, and whether the light ahead is red or green. Decision planning determines what the car should do: whether to follow or go around, accelerate or slow down, and which route is safe, efficient, and reasonably comfortable. Motion control requires retrofitting the actuation systems of a traditional car with electronic control. Once the decision commands and trajectory are passed to the controller, actuators such as the motor, steering, and brakes should follow the planned trajectory as quickly and with as little deviation as possible, much as a strong body carries out the instructions of the mind.

Environment perception is the ‘eyes’ of the driverless car

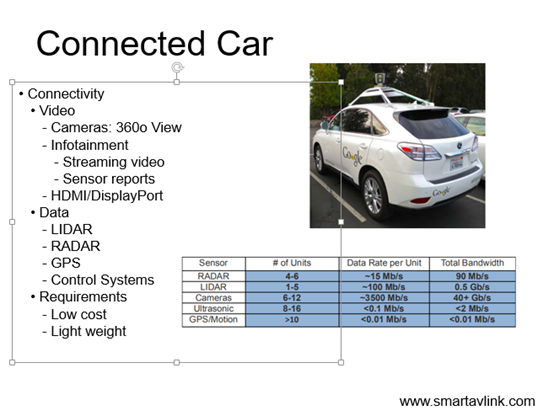

In a driverless car, the sensors make up a perception module that replaces the driver’s senses. Safe driving depends on obtaining environmental status information quickly and accurately, including the distance to obstacles, the state of the traffic light ahead, the number on a speed limit sign, and the vehicle’s own state such as position and speed. Commonly used sensors for detecting the environment include cameras, lidar (laser radar), millimeter-wave radar, and ultrasonic sensors; sensors for determining the state of the vehicle itself include GPS/inertial navigation (INS) and wheel speed sensors.

Driverless cars need multiple sensors that divide the work and cooperate

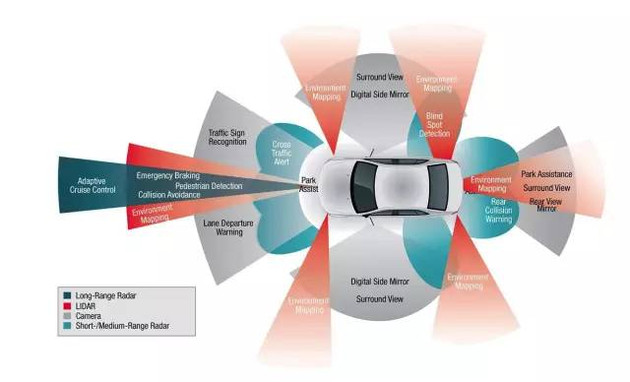

A camera can classify obstacles according to their visual features. Obtaining the depth of an obstacle requires two cameras, an arrangement generally called binocular stereo vision. The two cameras are mounted a fixed distance apart, like the two eyes in human binocular parallax, and the pixel offset (disparity) between the two images is converted by triangulation into three-dimensional information about the object. In addition to helping the car determine its position and speed, the main function of the binocular camera is to recognize traffic lights and signs on the road so that the autonomous vehicle follows traffic rules. However, the binocular camera is strongly affected by changes in weather and lighting, and the computation involved is heavy, which places high performance demands on the computing unit.
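The triangulation step can be illustrated with a minimal sketch. It assumes an idealized, rectified stereo pair, for which depth follows from Z = f · B / d (focal length f in pixels, baseline B in meters, disparity d in pixels). The function names and the numbers in the usage lines are illustrative assumptions, not values from any particular camera.

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth in meters of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means the point is at infinity")
    return focal_px * baseline_m / disparity_px


def pixel_to_3d(u, v, depth_m, focal_px, cx, cy):
    """Back-project pixel (u, v) with known depth into camera coordinates (x, y, z)."""
    x = (u - cx) * depth_m / focal_px
    y = (v - cy) * depth_m / focal_px
    return x, y, depth_m


# Hypothetical rig: 700 px focal length, 12 cm baseline, principal point (320, 240).
depth = stereo_depth(focal_px=700.0, baseline_m=0.12, disparity_px=8.4)
print(f"depth = {depth:.2f} m")
print(pixel_to_3d(u=390.0, v=240.0, depth_m=depth, focal_px=700.0, cx=320.0, cy=240.0))
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant objects than for nearby ones, which is one reason the matching has to be precise.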

The radars commonly used in unmanned vehicles are lidar (laser radar) and millimeter-wave radar. Lidar detects the position and velocity of a target by emitting laser beams. It has a wide detection range and high accuracy in distance and position, so it is widely used for obstacle detection, three-dimensional environment mapping, distance keeping, and obstacle avoidance. However, lidar is susceptible to weather and performs poorly in rain, snow, and fog. In addition, the more beams the laser emitter has, the more points are collected per second and the better the detection performance. More beams also mean a more expensive lidar: a 64-beam lidar costs about 10 times as much as a 16-beam unit. Currently, Baidu’s and Google’s driverless cars carry 64-beam lidar.

Schematic diagram of lidar point cloud
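As a rough illustration of the two ideas above, the sketch below assumes a pulsed time-of-flight lidar (one common design, not necessarily the one used on the cars mentioned), where range is half the round-trip time multiplied by the speed of light. The scan parameters (1800 steps per revolution, 10 revolutions per second) are hypothetical and only serve to show how point throughput scales with beam count.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def tof_range(round_trip_s):
    """Range in meters from the round-trip time of a laser pulse."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def points_per_second(beams, steps_per_rev, revs_per_s):
    """Points collected per second by a spinning multi-beam lidar (idealized)."""
    return beams * steps_per_rev * revs_per_s


# A pulse that returns after 200 nanoseconds hit something roughly 30 m away.
print(f"range = {tof_range(200e-9):.2f} m")

# Hypothetical scan pattern: throughput grows linearly with the number of beams.
for beams in (16, 64):
    print(beams, "beams:", points_per_second(beams, steps_per_rev=1800, revs_per_s=10))
```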

Millimeter-wave radar has a narrow beam, high resolution, and strong resistance to interference. It penetrates fog, smoke, and dust well and adapts to the environment better than lidar: rain, fog, and darkness have almost no effect on the transmission of millimeter waves. It is also small, light, and high in spatial resolution. With the development of monolithic microwave integrated circuit technology, the price and size of millimeter-wave radar have fallen considerably. However, its detection range is directly constrained by propagation loss in its frequency band, it has difficulty perceiving pedestrians, and it cannot accurately model all surrounding obstacles.

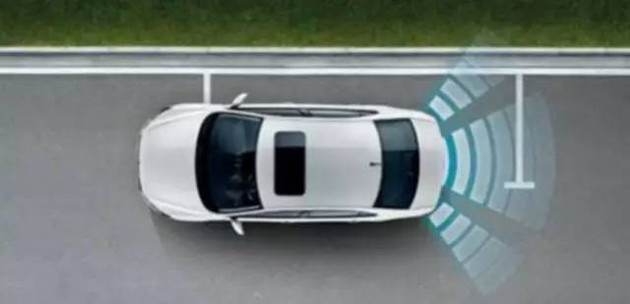

Ultrasonic sensor data is simple and fast to process, and the sensors are mainly used for close-range obstacle detection. The detection range is generally about 1 to 5 meters, and detailed position information cannot be obtained. When the car is driving at high speed, ultrasonic ranging cannot keep up with the real-time change in the distance between vehicles, and the error is large. In addition, ultrasonic waves have a large scattering angle and poor directivity, so when measuring a relatively distant target the echo signal is weak, which degrades measurement accuracy. In low-speed, short-range measurement, however, ultrasonic ranging sensors have great advantages.

Ultrasonic sensor
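A small sketch makes the speed limitation concrete. It assumes simple echo ranging with a speed of sound of about 343 m/s (dry air near 20 °C); the function names and the example speeds are illustrative assumptions.

```python
SPEED_OF_SOUND = 343.0  # meters per second, assumed for dry air near 20 degrees C


def echo_distance(round_trip_s, speed_of_sound=SPEED_OF_SOUND):
    """Distance in meters to a target from the round-trip time of an ultrasonic echo."""
    return speed_of_sound * round_trip_s / 2.0


def travel_during_echo(target_m, vehicle_speed_mps, speed_of_sound=SPEED_OF_SOUND):
    """How far the vehicle moves while one echo travels to the target and back."""
    round_trip_s = 2.0 * target_m / speed_of_sound
    return vehicle_speed_mps * round_trip_s


print(f"20 ms echo -> {echo_distance(0.020):.2f} m")
# Compare parking speed (2 m/s) with highway speed (30 m/s) for a target 5 m away.
for speed in (2.0, 30.0):
    moved = travel_during_echo(target_m=5.0, vehicle_speed_mps=speed)
    print(f"at {speed:.0f} m/s the car moves {moved:.2f} m before the echo returns")
```

At parking speed the car moves only a few centimeters per measurement, while at highway speed it moves a large fraction of a meter, so each reading is already stale when it arrives.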

GPS/INS and wheel speed sensors are primarily used to determine the position of the car itself, and their data are often fused to improve positioning accuracy.
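The article does not say how these data are combined. One common choice is a Kalman filter, sketched here in its simplest one-dimensional form as an assumption: wheel speed drives the prediction, and a GPS fix corrects it. The function name, the noise variances, and the numbers in the usage lines are all hypothetical.

```python
def fuse_position(x, p, wheel_speed, dt, process_var, gps_pos, gps_var):
    """One predict/update cycle of a scalar Kalman filter along the direction of travel.

    x, p        : previous position estimate (m) and its variance (m^2)
    wheel_speed : speed from the wheel speed sensor (m/s)
    process_var : variance added per step by odometry drift (m^2)
    gps_pos     : GPS position measurement (m), with variance gps_var (m^2)
    """
    # Predict: dead-reckon forward using wheel speed; uncertainty grows.
    x_pred = x + wheel_speed * dt
    p_pred = p + process_var

    # Update: blend in the GPS fix, weighted by the relative uncertainties.
    gain = p_pred / (p_pred + gps_var)
    x_new = x_pred + gain * (gps_pos - x_pred)
    p_new = (1.0 - gain) * p_pred
    return x_new, p_new


x, p = fuse_position(x=0.0, p=1.0, wheel_speed=10.0, dt=0.1,
                     process_var=0.5, gps_pos=1.2, gps_var=1.5)
print(f"fused position = {x:.3f} m, variance = {p:.3f} m^2")
```

The fused variance comes out smaller than both the predicted variance and the GPS variance, which is the sense in which fusion improves accuracy.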

Multi-sensor fusion is a very common technique in environment perception modules, and it can reduce error. For example, image edges often coincide with depth discontinuities. By extracting the edges of the two-dimensional camera image and mapping them to the same points as the depth information from the lidar (co-point mapping), the vanishing point of the road in the two-dimensional perspective image can be matched with the three-dimensional lidar information, so that the road surface can be segmented more accurately and the surrounding buildings can be located.
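One plausible reading of this co-point mapping is to project lidar points into the image and attach their depth to edge pixels. The sketch below assumes a pinhole camera model and lidar points that have already been transformed into the camera frame (the lidar-to-camera calibration is omitted). The function names, intrinsics, and sample points are hypothetical.

```python
def project_to_pixel(point_cam, fx, fy, cx, cy):
    """Project a 3D point (x right, y down, z forward, in meters) to a pixel (u, v)."""
    x, y, z = point_cam
    if z <= 0:
        return None  # behind the camera, not visible
    return round(fx * x / z + cx), round(fy * y / z + cy)


def depth_at_edges(points_cam, edge_pixels, fx, fy, cx, cy):
    """Map each edge pixel hit by a lidar point to the nearest depth observed there."""
    depths = {}
    for point in points_cam:
        pixel = project_to_pixel(point, fx, fy, cx, cy)
        if pixel in edge_pixels:
            z = point[2]
            depths[pixel] = min(z, depths.get(pixel, z))
    return depths


# Hypothetical data: two lidar returns land on the same edge pixel, one does not.
edges = {(370, 265)}
points = [(1.0, 0.5, 10.0), (2.0, 1.0, 20.0), (-1.0, 0.0, 5.0)]
print(depth_at_edges(points, edges, fx=500.0, fy=500.0, cx=320.0, cy=240.0))
```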

In addition, a high-precision map is a powerful support for automatic driving. With very accurate map information, the lane lines can be taken directly from the map, which reduces the burden of recognizing them visually.

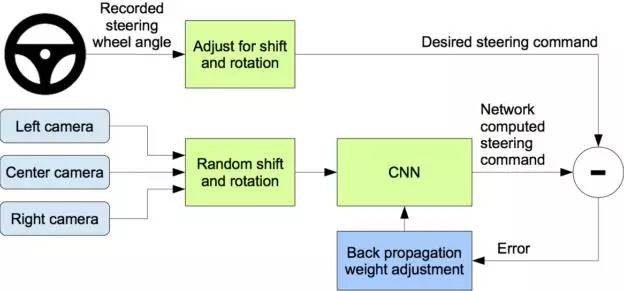

At present, mainstream decision-making frameworks fall into two categories: decision-making based on expert rules and decision-making based on machine learning, with the latter receiving increasing attention and study. For example, NVIDIA feeds the raw pixels captured by a front camera through a trained convolutional neural network (CNN), which outputs the steering command for the car. Driverless cars may have to drive on unstructured roads such as mountain roads and construction sites, and such road conditions are hard to enumerate exhaustively, so it is unrealistic to rely on traditional expert algorithms that handle changing situations through conditional rules.

NVIDIA’s learning framework is as follows:

The training data consists of single frames sampled from video, each paired with the corresponding steering command. The predicted steering command is compared with the ideal command, and the weights of the CNN model are then adjusted by the backpropagation algorithm so that the prediction is as close as possible to the ideal value. The trained model can generate steering commands from the data of the front-facing camera.
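The compare-then-adjust loop can be sketched with a deliberately tiny stand-in. This is not NVIDIA's network: a single linear unit replaces the CNN, two hand-made features replace the image pixels, and plain stochastic gradient descent on squared error replaces full backpropagation through many layers. All names and data are hypothetical.

```python
def train_steering_model(samples, lr=0.1, epochs=500):
    """Fit steering = w . features + b by stochastic gradient descent on squared error."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, ideal in samples:
            predicted = sum(w * f for w, f in zip(weights, features)) + bias
            error = predicted - ideal  # compare prediction with the ideal command
            # Adjust each weight against the gradient of 0.5 * error**2.
            weights = [w - lr * error * f for w, f in zip(weights, features)]
            bias -= lr * error
    return weights, bias


# Hypothetical (features, ideal steering) pairs generated by steering = 0.5*f0 - 0.25*f1.
data = [([1.0, 0.0], 0.5), ([0.0, 1.0], -0.25), ([1.0, 1.0], 0.25), ([0.0, 0.0], 0.0)]
weights, bias = train_steering_model(data)
print([round(w, 3) for w in weights], round(bias, 3))
```

In a real CNN the same error signal is propagated backward through every layer, but the principle of nudging weights to shrink the gap between predicted and ideal commands is the same.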

Rather than conventional controllers and actuators, unmanned vehicles prefer by-wire actuators, such as steer-by-wire, brake-by-wire, and drive-by-wire systems, for precise control.

In local path planning, the self-driving car weighs constraints such as the surrounding environment and its own state, plans an ideal lane-change path, and transmits the instructions to the relevant actuators. If the actuators cannot deliver the steering angle that the path requires, the vehicle will deviate from the planned path. The motion control algorithm is therefore also crucial.
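The article does not name a specific control algorithm. As one well-known example of turning a planned path into a steering angle, the sketch below uses the pure pursuit law on a kinematic bicycle model: steer toward a look-ahead point on the path with angle δ = atan(2 · L · sin(α) / l_d), where L is the wheelbase, α the bearing of the target relative to the heading, and l_d the distance to the target. The function name and the numbers are assumptions.

```python
import math


def pure_pursuit_steering(pose, target, wheelbase_m):
    """Front-wheel steering angle (rad) that arcs the rear axle through the target point.

    pose   : (x, y, yaw) of the rear axle, yaw in radians
    target : (x, y) look-ahead point on the planned path
    """
    x, y, yaw = pose
    dx, dy = target[0] - x, target[1] - y
    lookahead = math.hypot(dx, dy)
    if lookahead == 0.0:
        return 0.0  # already at the target, no steering correction needed
    alpha = math.atan2(dy, dx) - yaw  # bearing of the target relative to the heading
    return math.atan2(2.0 * wheelbase_m * math.sin(alpha), lookahead)


# Hypothetical lane change: target is 10 m ahead and 3.5 m to the left.
angle = pure_pursuit_steering(pose=(0.0, 0.0, 0.0), target=(10.0, 3.5), wheelbase_m=2.7)
print(f"steering angle = {math.degrees(angle):.1f} degrees")
```

If the steering actuator saturates below the commanded angle, the car follows a wider arc than planned, which is exactly the deviation described above.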

What is the future trend of driverless cars?

At present, the technical routes for unmanned vehicles fall mainly into two types. One relies on the on-board sensors introduced in this article to obtain information; the other relies on 5G communication technology to obtain environment information through vehicle-to-vehicle and vehicle-to-infrastructure communication. In comparison, the former does not depend on upgrading the infrastructure or on the premise that the other cars on the road are also intelligent, so it is easier to achieve. Although driverless cars can already handle 99% of road conditions safely, the remaining 1% requires 99% of the engineers’ effort to solve. By 2020, smart cars should still be presented to consumers in the form of ADAS.